Muhammad Usama Saleem

Ph.D. Student

University of North Carolina at Charlotte

About me

I am a Ph.D. candidate in Computer Science at the University of North Carolina at Charlotte, supervised by Dr. Pu Wang in the GENIUS Lab. In industry, I work as a researcher with the Multimodal GenAI teams at Amazon and Lowe’s, where I develop multimodal large language models (MLLMs) to enhance operational efficiency and customer experience in complex, real-world environments. I have also joined Google as a Research Scientist Intern in the Extended Reality (AR/VR) team, where I work on advancing multimodal and generative AI for immersive technologies.

Research Interests

My research focuses on building multimodal foundation models that unify real-time perception with high-fidelity synthesis. I aim to develop context-aware AI systems capable of perceiving, reconstructing, and interacting with complex human behavior across both physical and virtual environments. My work centers on multimodal motion synthesis frameworks that enable controllable, high-quality 3D human animation for real-time applications, as well as 3D human pose estimation and mesh reconstruction using generative masked modeling. Ultimately, I seek to leverage these generative foundations to create AI systems that can both understand human behavior in the physical world and synthesize interactive digital counterparts within immersive XR environments.

If you have any research opportunities or open positions, please feel free to reach out at msaleem2@charlotte.edu.

News

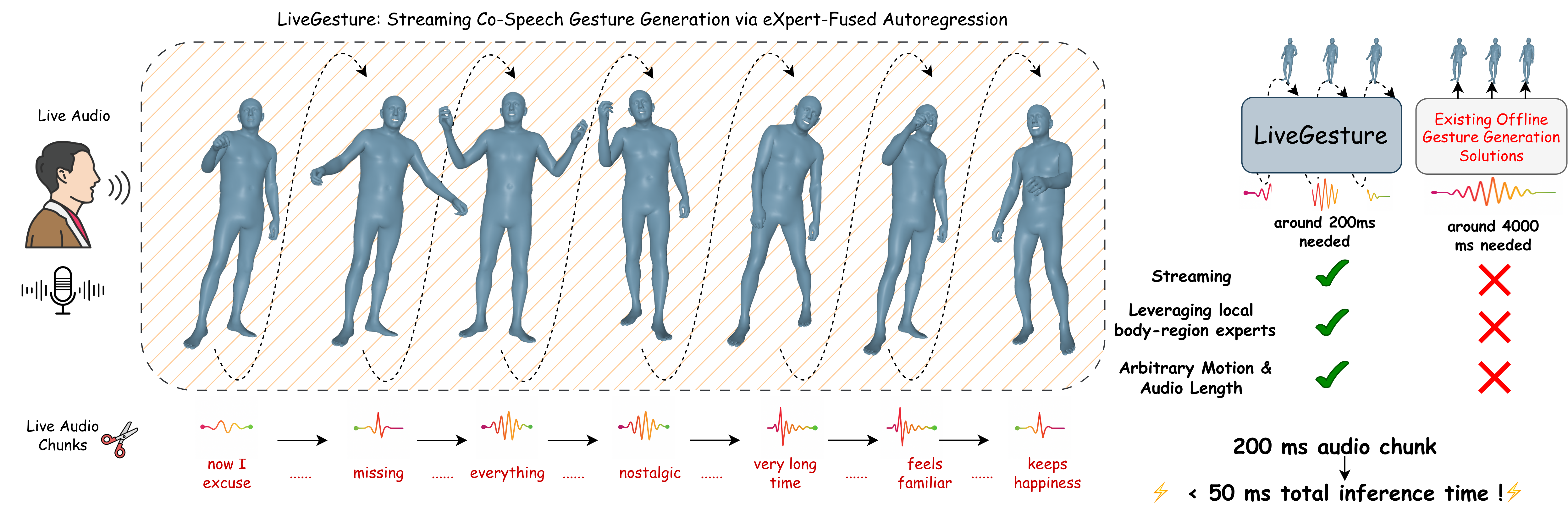

- Feb 2026: A paper on “LiveGesture: Streamable Co-Speech Gesture Generation Model” accepted at CVPR 2026!

- Nov 2025: “Monocular Models are Strong Learners for Multi-View Human Mesh Recovery” will be available on arXiv!

- Nov 2025: “Walk Before You Dance: High-fidelity and Editable Dance Synthesis via Generative Masked Motion Prior” was accepted to AAAI 2026!

- October 2025: Joined Google as a Research Scientist Intern in the Extended Reality (AR/VR) team!

- October 2025: MaskControl paper selected for Oral Presentation and 🏆 Award Candidate at ICCV 2025!

- Aug 2025: Available for Research Scientist / Engineer opportunities. Please reach out if there’s a good match.

- July 2025: Poster selected for the Amazon WWAS Science Fair in Seattle; presented a next-gen multimodal shopping demo to VPs!

- June 2025: Joined Amazon as an Applied Scientist II Intern.

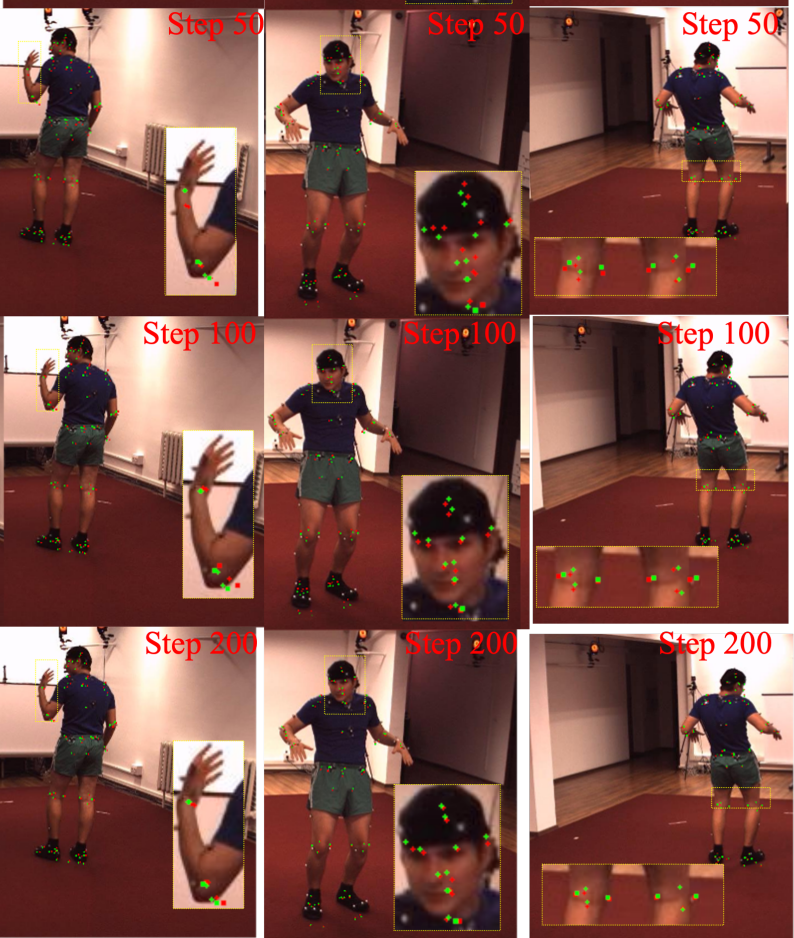

- June 2025: A paper on “MaskHand: Generative Masked Modeling for Robust Hand Mesh Reconstruction in the Wild” is accepted to ICCV 2025!

- June 2025: A paper on “Spatio-Temporal Control for Masked Motion Synthesis” is accepted to ICCV 2025 (Oral)!

- April 2025: “Walk Before You Dance: High-fidelity and Editable Dance Synthesis via Generative Masked Motion Prior” is now available on arXiv.

- Dec 2024: “GenHMR: Generative Human Mesh Recovery” was accepted to AAAI 2025, presented in Philadelphia, and received a travel award.

- Oct 2024: “BioPose: Biomechanically-Accurate 3D Pose Estimation from Monocular Videos” is accepted to WACV 2025!

- July 2024: “BAMM: Bidirectional Autoregressive Motion Model” is accepted to ECCV 2024!

- Sept 2023: Joined Lowe’s as Research Lead of the Computer Vision UNCC Team

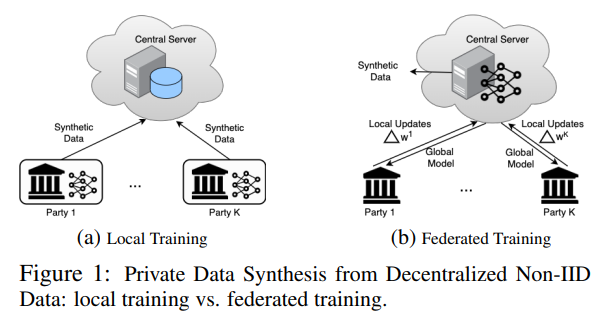

- June 2023: “Private Data Synthesis from Decentralized Non-IID Data” accepted to IJCNN 2023, presented in Queensland, Australia, and received a $5500 travel grant!

- April 2023: Presented at the SIAM International Conference on Data Mining (SDM’23) Doctoral Forum; awarded a $1400 NSF travel grant.

- July 2022: “Privacy Enhancement for Cloud-Based Few-Shot Learning” accepted to IJCNN 2022!

- Jan 2022: “DP-Shield: Face Obfuscation with Differential Privacy” accepted to EDBT 2022!